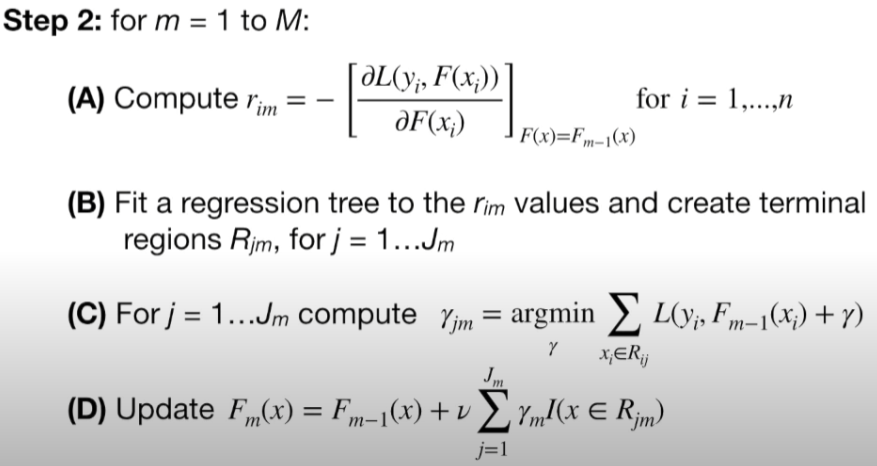

기말고사 공부 할 겸 Gradient Boosting을 바닥부터 구현해 보았다.

나는 이해하지 못하면 암기를 못하는 편이다...

찝찝함을 없애기 위해 한번 파이썬으로 Gradient Boosting을 구현해 보았다.

하악...

코드 >>

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

|

import numpy as np

from sklearn.datasets import load_breast_cancer

from sklearn.model_selection import train_test_split

def probability(log_odds: float) -> np.array:

return np.exp(log_odds) / (np.exp(log_odds) + 1)

class GradientBoostingClassifier:

def __init__(self,

X: np.array,

y: np.array,

n_boost: int = 100,

learning_rate: float = 0.1,

dt_max_depth: int = 1,

dt_max_leaf_nodes: int= 3):

y_bin = np.bincount(y)

y_most = np.max(y_bin)

y_less = np.min(y_bin)

self.X = X

self.ans = y

self.n_samples = y.shape[0]

self.init_logit = np.log(y_most / y_less)

self.init_prob = np.zeros([self.n_samples])

self.init_prob[y == 0] = 1 - probability(self.init_logit)

self.init_prob[y == 1] = probability(self.init_logit)

self.init_loss = -(self.ans*np.log(self.init_prob) +\

(1-self.ans)*np.log(1-self.init_prob)).sum()

self.n_boost = n_boost

self.learning_rate = learning_rate

self.dt_max_depth = dt_max_depth

self.dt_max_leaf_nodes = dt_max_leaf_nodes

self.logits = np.zeros([self.n_boost, self.n_samples])

self.residuals = np.zeros([self.n_boost, self.n_samples])

self.loss = np.zeros([self.n_boost])

self.probs = np.zeros([self.n_boost, self.n_samples])

self.preds = np.zeros([self.n_boost, self.n_samples])

self.scores = np.zeros([self.n_boost])

self.gamma = np.zeros([self.n_boost, self.n_samples])

self.gamma_values = np.zeros([self.n_boost, self.n_samples])

self.decision_trees: DecisionTreeRegressor = []

self.logits[0] = [self.init_logit] * self.n_samples

self.probs[0] = self.init_prob

self.loss[0] = self.init_loss

def fit(self) -> None:

for i in range(0, self.n_boost-1):

self.residuals[i] = self.ans - self.probs[i]

dt = DecisionTreeRegressor(max_depth = self.dt_max_depth)

dt = dt.fit(self.X, self.ans)

self.decision_trees.append(dt)

leaf_indices = dt.apply(self.X)

unique_leaves = np.unique(leaf_indices)

n_leaf = len(unique_leaves)

for ileaf in range(n_leaf):

leaf_index = unique_leaves[ileaf]

n_leaf = len(leaf_indices[leaf_indices == leaf_index])

prev_prob = self.probs[i][leaf_indices == leaf_index]

denominator = np.sum(prev_prob * (1 - prev_prob))

nominator = np.sum(self.residuals[i][leaf_indices == leaf_index])

self.gamma_values[i][ileaf] = nominator / (denominator)

self.gamma[i] = [self.gamma_values[i][np.where(unique_leaves == index)] for index in leaf_indices]

self.logits[i+1] = self.logits[i] + self.learning_rate * self.gamma[i]

self.probs[i+1] = np.array([probability(logit) for logit in self.logits[i+1]])

self.preds[i+1] = (self.probs[i+1] > 0.5) * 1.0

self.scores[i+1] = np.sum(self.preds[i+1]==self.ans) / self.n_samples

self.loss[i+1] = np.sum(-self.ans * self.logits[i+1] +\

np.log(1+np.exp(self.logits[i+1])))

def predict(self, X: np.array) -> np.array:

init_logit = [self.init_logit] * len(X)

for i in range(0, self.n_boost-1):

leaf_indices = self.decision_trees[i].apply(X)

unique_leaves = np.unique(leaf_indices)

n_leaf = unique_leaves.shape[0]

gamma = np.ravel(np.array([self.gamma_values[i][np.where(unique_leaves == index)] for index in leaf_indices]))

if i == 0:

logits = init_logit + gamma * self.learning_rate

else:

logits += gamma * self.learning_rate

probs = np.array([probability(logit) for logit in logits])

preds = (probs >= 0.5) * 1.0

return preds

#######################################

############## 테스트 #################

#######################################

data = load_breast_cancer()

X = data.data

y = data.target

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.15, random_state=1)

gbc1 = GradientBoostingClassifier(X_train, y_train, n_boost=100, learning_rate=0.1, dt_max_depth = 1)

gbc1.fit()

preds = gbc1.predict(X_test)

scores = np.mean(preds==y_test)

print(scores)

|

'머신러닝' 카테고리의 다른 글

| 링크, google landmark recognition 설명 (0) | 2021.08.22 |

|---|---|

| 공부기록 - Optimal Bayesian Rule (0) | 2021.06.16 |

| Bias Variance Decomposition and XGBOOST와 RANDOMFOREST 주의사항 (0) | 2021.06.10 |

| Python ADA boost Binary Classification 바닥부터 구현해보기 (0) | 2021.06.10 |

| 파이썬 VIT - Vision Transformer 텐서플로우 코랩 구현 (2) | 2021.06.06 |